What is Kinect?

Kinect is a motion-sensing device that recognizes human movement. It combines a 3D depth camera, an RGB camera, and a microphone array, which lets it track body positions and movements. Beyond gaming, Kinect is now widely used in research and in human-computer interaction (HCI) projects.

Purpose of a Virtual Environment

A virtual environment keeps each project's dependencies isolated. Each project gets its own set of libraries and versions, so installing or upgrading a package for one project cannot break another.

To create and activate a virtual environment

- Create the virtual environment folder

- In the project directory, such as D:\Users\Student\Desktop\kinect, create the virtual environment in a dot-prefixed folder (here, .bonjour):

python -m venv .bonjour

- Activate the virtual environment

- Use this command to activate it

.\.bonjour\Scripts\activate

- Check if activated

- If activation succeeds, the prompt shows the environment name in parentheses before the directory path, which is (.bonjour) for the folder created above. This indicates that the virtual environment is in use.
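
Besides reading the prompt, activation can also be checked from inside Python. The snippet below is a minimal sketch of that check. The helper name in_virtual_environment is made up for illustration, and the check relies only on the standard sys module.

```python
import sys


def in_virtual_environment() -> bool:
    """Return True when this interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment folder, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtual_environment())
    print("interpreter prefix:", sys.prefix)
```

When the environment is active, the printed prefix should end with the environment folder name.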

Kinect and Sensor Configuration

Kinect has multiple sensors that capture both image and depth data simultaneously. Data is stored in the project’s data folder, with image and depth data organized separately.
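
One way to keep image and depth data apart is to give each its own subfolder and a shared frame number. The sketch below shows that idea only. The subfolder names (image, depth), the file extensions, and the helper frame_paths are assumptions for illustration, not the project's actual layout.

```python
import tempfile
from pathlib import Path
from typing import Tuple


def frame_paths(data_root: Path, frame_index: int) -> Tuple[Path, Path]:
    """Return (image_path, depth_path) for one captured frame."""
    # Assumed layout: <data_root>/image and <data_root>/depth.
    image_dir = data_root / "image"
    depth_dir = data_root / "depth"
    image_dir.mkdir(parents=True, exist_ok=True)
    depth_dir.mkdir(parents=True, exist_ok=True)
    # The same zero-padded frame number pairs each image with its depth map.
    name = f"frame_{frame_index:06d}"
    return image_dir / f"{name}.png", depth_dir / f"{name}.raw"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        image_path, depth_path = frame_paths(Path(tmp) / "data", 12)
        print(image_path.name, depth_path.name)
```

Sharing the frame number between the two folders makes it straightforward to match a colour image with the depth map captured at the same moment.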

Examples of Kinect Functionalities

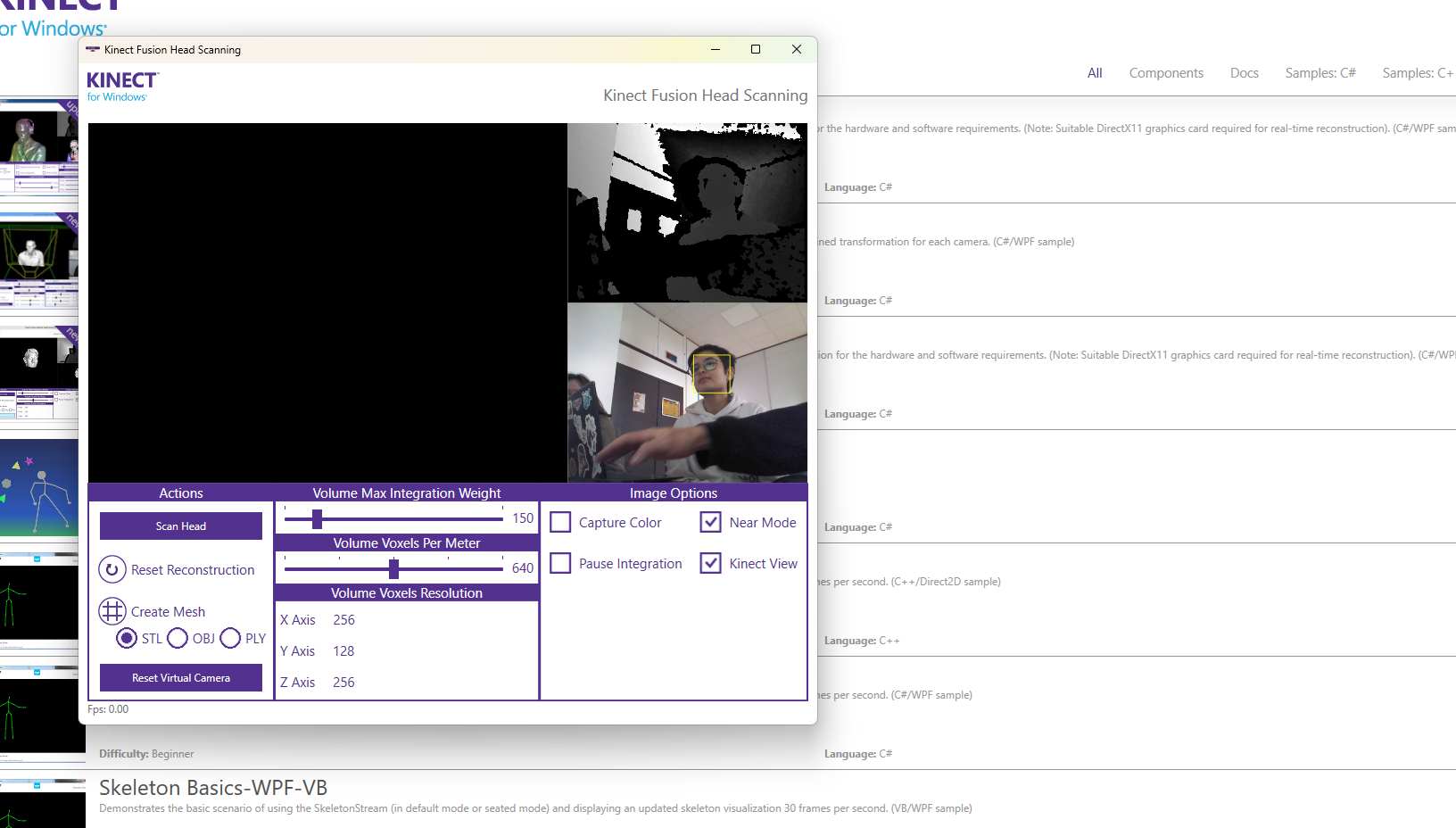

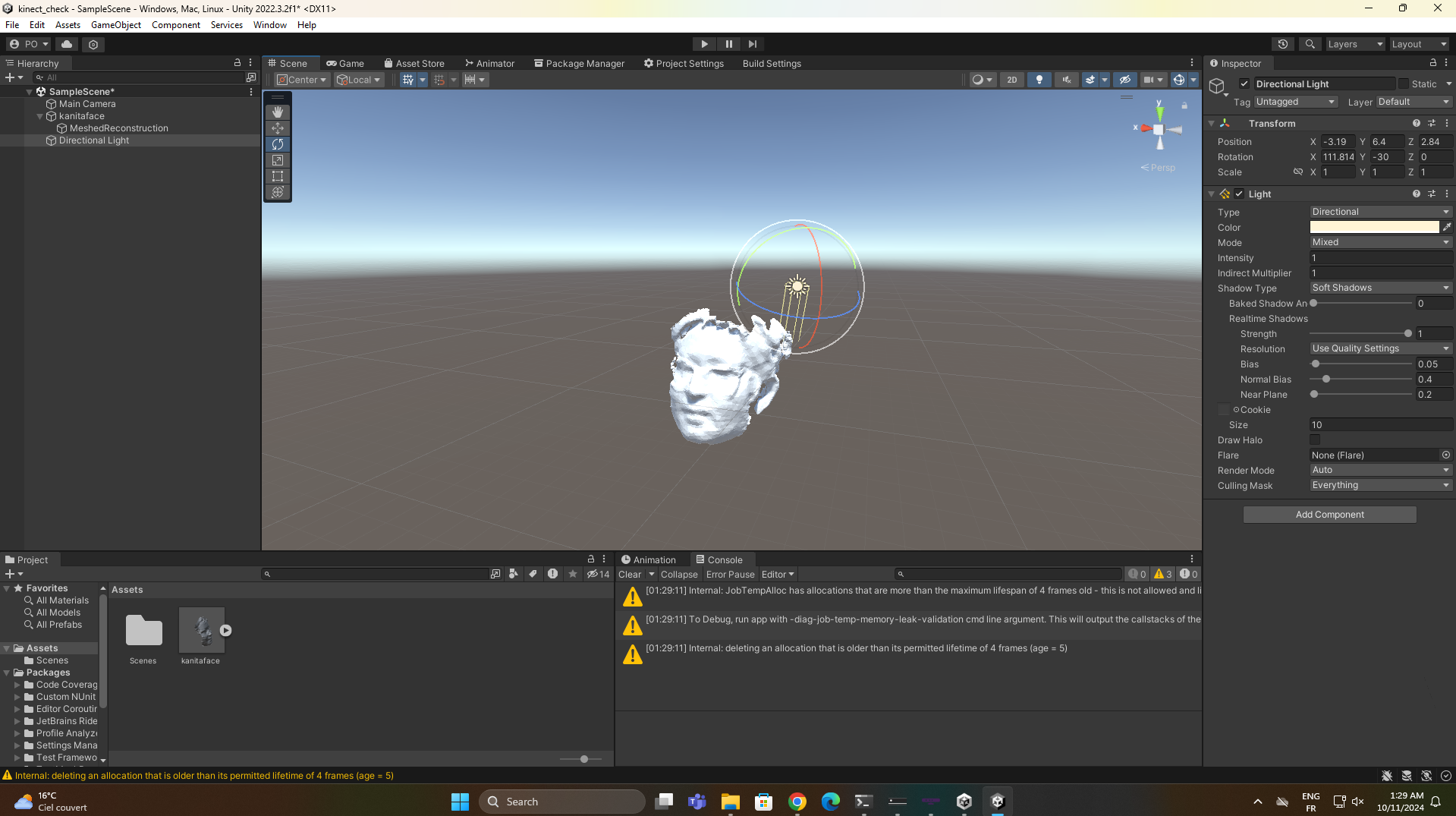

- Kinect Fusion Head Scanning

- As shown in the image above, Kinect Fusion allows 3D head scanning, which reconstructs a detailed 3D model of the user’s head. Users can adjust parameters like “Volume Max Integration Weight” and “Volume Voxel Resolution” to control the detail and quality of the scan.

- This functionality is useful for applications that require 3D head models, which can be exported as .STL, .OBJ, or .PLY files for use in other software.

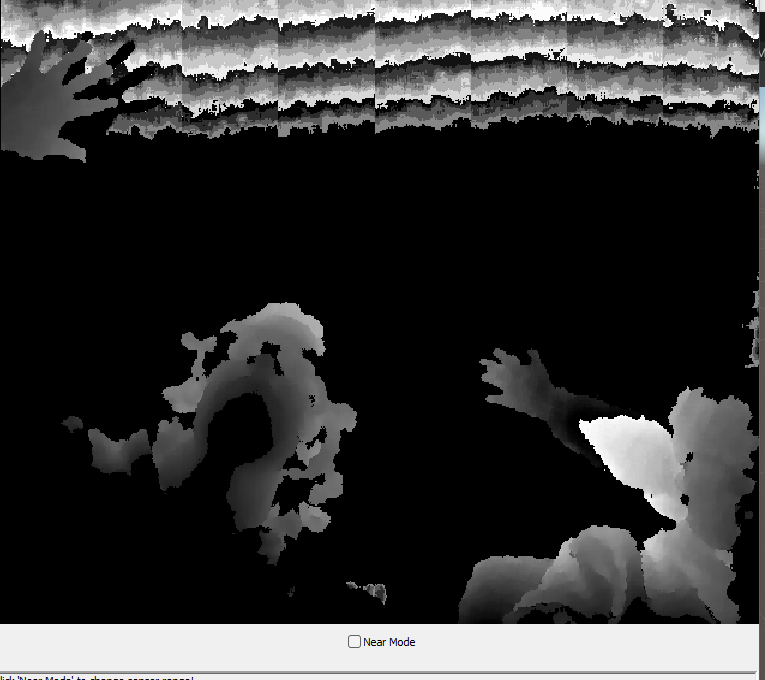

- Depth Sensing and Mapping

- This image illustrates Kinect’s depth sensing capabilities, where objects are visualized based on their distance from the sensor. Black areas represent regions farthest from the Kinect, while closer areas appear in lighter shades.

- This depth map allows for spatial awareness, essential for applications like gesture recognition and object tracking.

- Face Tracking

- This image shows Kinect’s face tracking functionality. Here, Kinect detects the user’s face and maps key facial features using a network of lines, making it well suited to applications requiring facial recognition, facial expression analysis, or real-time face-driven animations.
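
The shading described under Depth Sensing and Mapping can be sketched as a mapping from distance to a gray level. This is an illustration only, not how the Kinect viewer is implemented. The function name depth_to_gray, the 500 mm and 4500 mm limits, and the treatment of a zero reading as "no data" are all assumptions.

```python
def depth_to_gray(depth_mm: int, near_mm: int = 500, far_mm: int = 4500) -> int:
    """Map a depth reading in millimetres to a gray level from 0 to 255.

    Closer points come out lighter and the farthest points come out black.
    """
    if depth_mm <= 0:
        # Assumption: a zero reading means no measurement, so draw it black.
        return 0
    # Clamp the reading into the assumed working range of the sensor.
    clamped = min(max(depth_mm, near_mm), far_mm)
    # 1.0 at the near limit, 0.0 at the far limit.
    closeness = (far_mm - clamped) / (far_mm - near_mm)
    return round(255 * closeness)


if __name__ == "__main__":
    row_mm = [0, 500, 1500, 3000, 4500]
    print([depth_to_gray(d) for d in row_mm])
```

Applying the function to every pixel of a depth frame gives a grayscale image of the kind described above.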

Real-Time Data Collection and Processing

To initiate real-time data collection, run the real_time.py script:

python real_time.py

This script enables real-time detection of faces and bodies. It can be modified to add functionalities such as drawing bounding boxes around detected faces or adding other interactive elements.
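
The contents of real_time.py are not shown here, so the following is only a sketch of the bounding-box idea, not the script's actual code. It assumes a detector reports a face as (left, top, right, bottom) pixel coordinates and treats a frame as a plain list of pixel rows. The helper draw_box is hypothetical. A real script would more likely call the rectangle-drawing function of whatever imaging library it already uses.

```python
from typing import List


def draw_box(frame: List[List[int]], left: int, top: int,
             right: int, bottom: int, value: int = 255) -> List[List[int]]:
    """Draw a one-pixel rectangle outline in place (inclusive coordinates)."""
    height = len(frame)
    width = len(frame[0]) if height else 0
    # Clip the box to the frame so a partly visible face cannot raise IndexError.
    left, right = max(0, left), min(width - 1, right)
    top, bottom = max(0, top), min(height - 1, bottom)
    if left > right or top > bottom:
        return frame  # the box lies entirely outside the frame
    for x in range(left, right + 1):   # top and bottom edges
        frame[top][x] = value
        frame[bottom][x] = value
    for y in range(top, bottom + 1):   # left and right edges
        frame[y][left] = value
        frame[y][right] = value
    return frame


if __name__ == "__main__":
    frame = [[0] * 8 for _ in range(6)]  # blank 8x6 grayscale frame
    draw_box(frame, left=2, top=1, right=5, bottom=4)
    for row in frame:
        print("".join("#" if pixel else "." for pixel in row))
```

Only the outline is written, so the pixels inside the box stay unchanged for any later processing.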

Kinect SDK and Toolkit

In our project, we’re using the Kinect SDK and the Kinect Developer Toolkit. These tools give us access to Kinect’s main features, like motion tracking, depth sensing, and 3D scanning, making it easier to work with the Kinect sensor. The SDK includes useful sample projects, such as Skeleton Basics for tracking body movements.